The Med School Example - Building a Better, Stronger, Faster Interview

Answer these questions as honestly as you can:

1. Is interviewing a highly effective part of your admission assessment process?

2. Is there a good rate of return on all the time allocated to it week after week?

3. Do the interview reports you review at the decision table effectively discriminate among your applicants? Do they really reveal the noncognitive attributes you seek to understand better?

Garth Chalmers at University of Toronto Schools is familiar with these questions, and he used to worry that he couldn’t confidently answer them in the affirmative. Individual interviews cost him and his staff a great deal of time week after week, and when it came time to sit down and make decisions, the committee found that the ratings varied little from one applicant to the next. When disappointing decisions were communicated, some families expressed concerns that the process wasn’t fair or thorough, or that their children hadn’t received a sympathetic ear from their interviewers.

If only, he thought, there were a system and a process, established as valid and reliable by scientific study, that could dramatically reduce the time professional admission officers spend interviewing, provide more discriminating reports of applicant qualities, and be regarded by families as more professional, trustworthy, and fair. Such a tool might be worth thousands of dollars, Chalmers realized. Well, it’s priced at $2,000 annually, to be exact. That’s not cheap, but compared with the cost of the time your interview teams now spend interviewing individually, it may be a bargain.

The system was developed first at McMaster University for medical school admission, then spread across Canada, and is now increasingly used in U.S. medical schools such as Duke. It’s called the MMI: the Multiple Mini-Interview. McMaster had encountered a problem: too many of its newly enrolled students, despite high GPAs and test scores, weren’t flourishing in medical school. The school carefully reviewed the factors associated with success and then used them to design and test an interview process composed of ten stations, each featuring a carefully crafted mini-interview that allows applicants to demonstrate particular character strengths such as communication, time management, ethics, cooperation, and negotiation. (The MMI is distributed by a company called ProFitHR.)

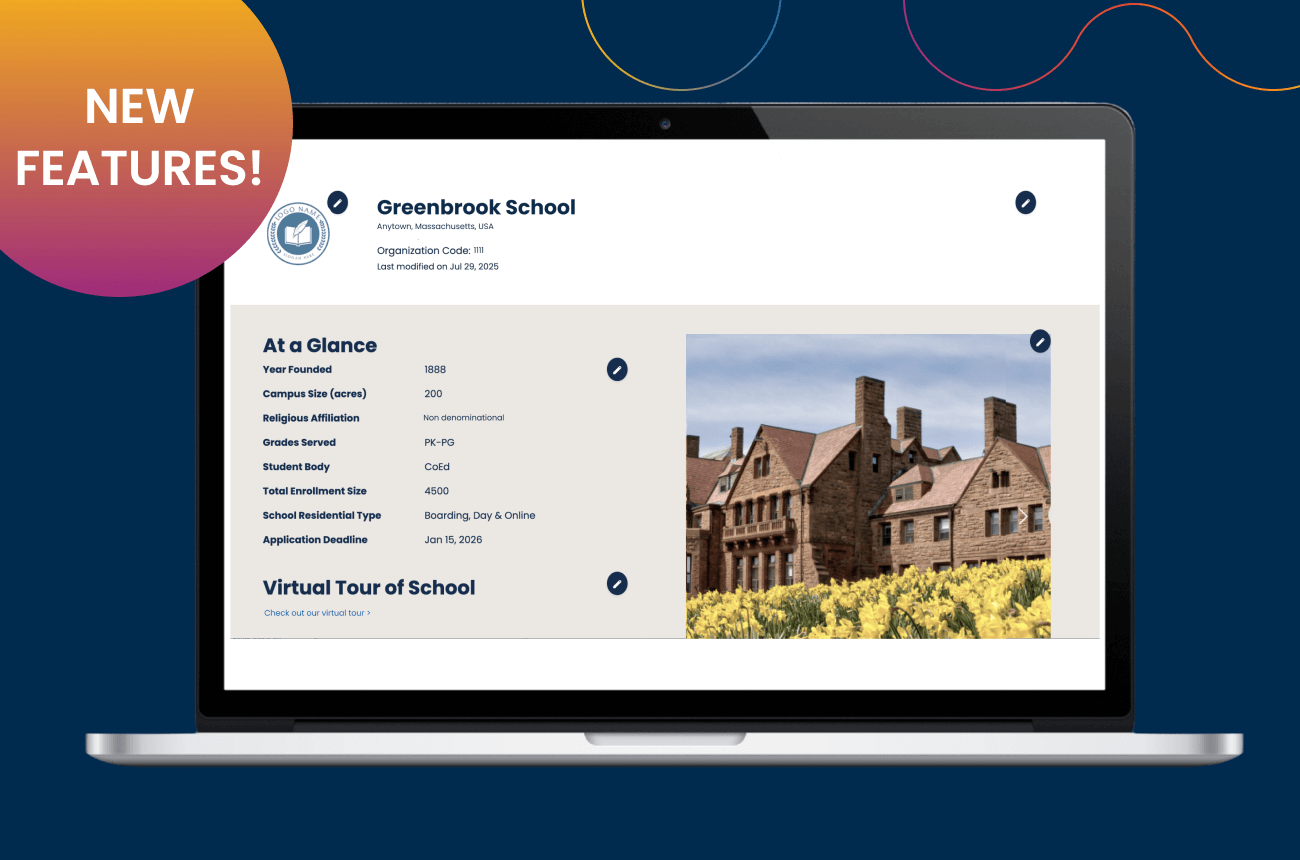

University of Toronto Schools has been using the tool for four years and finds it very effective. Using six stations instead of ten, with slightly modified questions for the sixth- and eighth-grade applicants he evaluates for admission to seventh and ninth grades, Chalmers finds the system far more informative and fair, and his families perceive it as fair, too. Each station is staffed by an interviewer trained briefly (for an hour before the event) to administer and assess applicant responses on just a single item and task. The MMI provides not only the items but also a thorough discussion, for the interviewer to review, of what to look for in a quality answer, along with a scale from 1 to 10 for rating the response. Students rotate through the six stations speed-dating style, spending five minutes at each (one minute to read and reflect on the prompt, four to respond), and by and large, Chalmers says, the kids like it and think it’s fun.

Chalmers uses the MMI items to reveal applicant attributes in communication, moral reasoning, logical reasoning, and self-reflection regarding character strengths generally; for admission selection, it should be noted, the committee largely restricts itself to the aggregate score, not the individual sections. Under the individual-interview approach, scores clustered tightly around 7.5 out of 10; with the MMI, Chalmers reports, applicants’ average scores spread much more widely, from 3 to 8, with an overall mean of about 6 to 6.5. Because each interviewer evaluates an applicant on just a single item, the interviewer can focus exclusively on the quality of the response and avoid the trap of feeling like they’re “judging” the child as a whole, which usually softens the heart and inflates the rating. Ratings are independent, too; interviewers do not discuss applicants before submitting their ratings.

The event itself, held on one winter Saturday, is, he says, “a bit of a Gong Show, but we are finding it a very effective way to get everyone through the process.” With 200 children going through six stations on a single day, he runs 12 separate circuits and needs nearly 80 adults to serve as interviewers! At first blush, of course, many schools will blanch: there’s no way. But University of Toronto Schools draws in alumni for the day, welcoming 60 or 70 of them back to campus for lunch and an hour or two of interviewing. When he puts out the invitation, Garth says, he is “inundated with volunteers.” The advancement office considers it one of the best-attended and most successful alumni events on the school’s annual calendar. Many younger alumni, college age or just beyond, are themselves thinking of applying to medical school, so they find the day great preparation for their own interviews. All in all, though it is a lot to organize, it is a win-win for the school.
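The staffing figures above can be sanity-checked with some back-of-envelope arithmetic. The Python sketch below is a hypothetical planning aid, not part of the MMI materials: the function name `plan` and its parameters are illustrative, and it assumes one interviewer per station in each circuit, applicants entering each circuit in full waves of six, and no time allowed for moving between stations. Under those assumptions, 12 circuits need 72 interviewers, consistent with the “nearly 80 adults” reported (leaving a few spares), and each circuit handles 17 applicants in three 30-minute waves.

```python
import math

# Figures reported for the University of Toronto Schools event.
APPLICANTS = 200
STATIONS = 6               # stations per circuit
CIRCUITS = 12              # circuits running in parallel
MINUTES_PER_STATION = 5    # 1 minute to read the prompt, 4 to respond


def plan(applicants, stations, circuits, minutes_per_station):
    """Hypothetical MMI logistics estimate.

    Assumes one interviewer per station in every circuit, applicants
    entering each circuit in full waves of `stations` people (each starting
    at a different station), and zero transition time between stations.
    Returns (interviewers, applicants_per_circuit, waves, minutes_per_circuit).
    """
    interviewers = stations * circuits
    per_circuit = math.ceil(applicants / circuits)
    waves = math.ceil(per_circuit / stations)
    minutes_per_wave = stations * minutes_per_station  # everyone visits every station
    return interviewers, per_circuit, waves, waves * minutes_per_wave


if __name__ == "__main__":
    interviewers, per_circuit, waves, minutes = plan(
        APPLICANTS, STATIONS, CIRCUITS, MINUTES_PER_STATION
    )
    print(f"{interviewers} interviewers, {per_circuit} applicants per circuit, "
          f"{waves} waves, about {minutes} minutes of interviewing per circuit")
```

Real events add time for transitions, briefings, and lunch, so the 90-minute figure is a floor rather than a schedule.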

What does the research say? Chalmers hasn’t conducted his own research in any great depth, but anecdotally he is very confident that his high MMI scorers go on to be outstanding students, and in his observation the converse is true as well. As for medical school admission, multiple peer-reviewed research articles have found strong evidence for the MMI’s validity. “Thus, the MMI showed incremental validity in combination with the other variables available at admission,” reported a 2012 article in JAMA, the Journal of the American Medical Association.

Look at the cost-benefit comparison reported in a 2006 study of medical school admission!

The MMI brings other fringe benefits besides alumni engagement. The school has also used the same tool with job applicants, for example in a recent search for a new social worker; many schools might find this a fabulous repurposing of the technique.

Prospective parents respond well: “They like the level of commitment to the process demonstrated by the use of the MMI,” Chalmers asserts. “We turn away a lot of kids, and there really seems to be a lot less bitterness. People appreciate that our decision was made not by the subjective opinion of me and my team, but by use of a tool externally validated and used in medical school admission internationally.”

Certainly, this technique is far better suited to day schools; perhaps it is impossible to deploy in the boarding school context. But I wonder: how hard would it be for an ingenious school to use video-conferencing to replicate the process virtually? Worth considering.